Challenging the Boundaries of Confidential Computing for AI

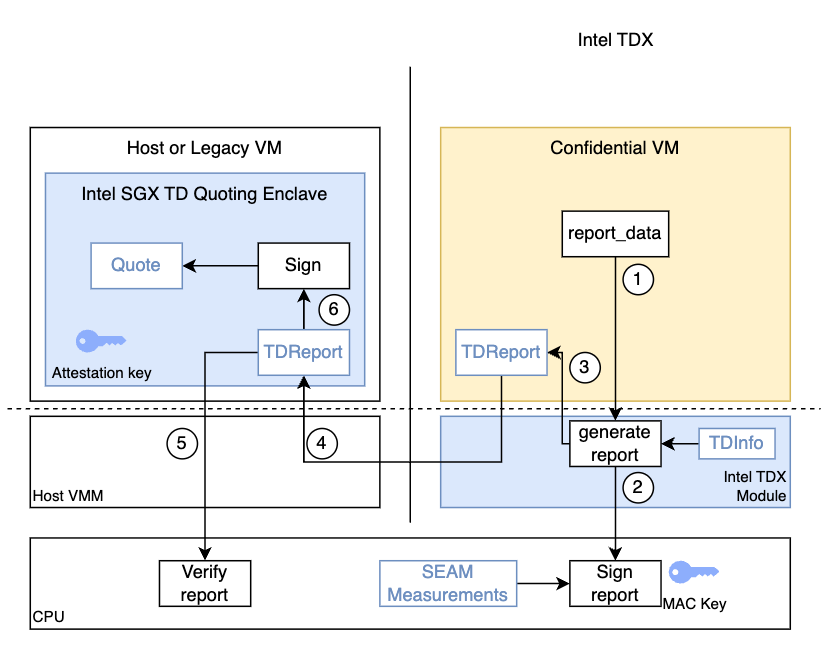

CENSUS has conducted an in-depth technical evaluation of Confidential AI workloads on Google Cloud Platform (GCP), focusing on the integration of Intel Trust Domain Extensions (TDX) and NVIDIA H100 GPUs within Confidential Virtual Machines (CVMs). The assessment explored whether hardware-based attestation could be extended consistently across both CPU and GPU components and whether a verifiable trust model could be maintained end-to-end. The work was performed on A3 instances and involved implementing end-to-end attestation flows, validating quote and key hierarchies, and operating under realistic cloud deployment constraints.
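To make the idea of attestation spanning both CPU and GPU more concrete, the following is a minimal Python sketch of a composite check. It is not the method used in the report: the `Evidence` type, the component labels, and the reference values are all hypothetical, and the sketch assumes that signature and certificate-chain validation of each vendor's evidence has already happened elsewhere. It only illustrates the final policy step, where a workload is trusted when every expected component is present, fresh, and matches its reference measurement.

```python
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """Hypothetical evidence from one component, assumed already signature-verified."""
    component: str    # illustrative label, e.g. "cpu-tdx" or "gpu-h100"
    nonce: bytes      # freshness value echoed back by the component
    measurement: str  # hex digest reported by the component


def verify_composite(evidence, expected_nonce, reference_measurements):
    """Return True only if all expected components are present, fresh, and match."""
    seen = {item.component: item for item in evidence}
    # Reject duplicate, missing, or unexpected components.
    if len(seen) != len(evidence) or set(seen) != set(reference_measurements):
        return False
    for component, reference in reference_measurements.items():
        item = seen[component]
        # The same verifier-chosen nonce must appear in every piece of evidence.
        if not hmac.compare_digest(item.nonce, expected_nonce):
            return False
        # Constant-time comparison against the reference measurement.
        if not hmac.compare_digest(item.measurement, reference):
            return False
    return True
```

Under these assumptions, a verifier would generate a fresh nonce per session, pass it to both the CPU and GPU evidence collection steps, and release secrets to the CVM only when `verify_composite` returns `True`.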

The report confirms that foundational attestation and isolation guarantees operate as intended, but it also highlights security gaps that must be addressed before Confidential AI can be adopted securely at scale. Key findings include limitations in firmware transparency (e.g., TDVF measurement without source availability), the lack of revocation mechanisms for in-VM key storage models, incomplete MIG (Multi-Instance GPU) enablement in cloud environments, and the introduction of new trust boundaries via vendor-managed APIs. These observations reflect the challenges of aligning evolving Confidential Computing capabilities with real-world operational demands in cloud infrastructure.

The insights from this evaluation are relevant to platform architects, cloud security engineers, and decision-makers building trustworthy AI systems. As confidential computing continues to gain traction, the ability to perform reproducible, verifiable attestation across heterogeneous compute stacks will become foundational. CENSUS shares this report to support the advancement of secure, privacy-preserving, and Zero Trust-aligned AI infrastructure.

The full report can be downloaded here.