An introduction to the LightBulb Framework

This blog post is a follow-up to my summer BSides Athens 2017 talk, “Lightbulb framework – shedding light on the dark side of WAFs and Filters”.

The “LightBulb Framework” is an easy-to-use black-box tool that uses automata learning to generate expressions that evade Web Application Firewalls (“WAFs”) and filters, allowing penetration testers to exploit SQL injection, XSS, or other vulnerabilities despite the presence of a WAF or filter. It is distributed under a free license (MIT License) and is also offered as an extension for the PortSwigger Burp Suite web proxy.

LightBulb’s core algorithms were first presented at the “2016 IEEE Symposium on Security and Privacy” [1] and the “2016 ACM SIGSAC Conference on Computer and Communications Security” [2]. The first implementation of the framework was presented at Black Hat Europe 2016 [3], while the graphical extension for Burp Suite was released during BSides Athens 2017 [4]. To date, the LightBulb Framework has identified 18 expressions [5] that bypass popular open source WAFs such as ModSecurity CRS, PHPIDS, WebCastellum, and Expose.

Machine Learning, GOFA and SFADiff

Most common web application attacks belong to the family of code injection attacks, in which a user-provided attack payload (e.g., XSS, SQL) is executed either by a server-side interpreter (e.g., the database) or by a client-side one (e.g., the browser). Modern WAFs attempt to mitigate such attacks by filtering web traffic using rulesets of attack payload signatures.

WAFs are essentially parsers, limited by the available attack rulesets. It is very difficult to recognize an attack without proper knowledge of the context in which the payload will be used, and thus it is impossible to design rulesets that cover all known and unknown malicious payloads. Hence, attackers have a strong chance of coming up with expressions that bypass a WAF. Can these expressions be generated in an automated way? Learning algorithms can help here. They make it possible to analyze such filter programs remotely, by querying the targeted programs and observing their output.
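The "query and observe" step can be pictured as a membership oracle. The sketch below is only an illustration: `FilterOracle` and `toy_waf` are hypothetical names, and the toy filter stands in for a real remote WAF that would be reached over HTTP.

```python
from typing import Callable, Dict, List


class FilterOracle:
    """Membership oracle: answers 'does the filter block this payload?'."""

    def __init__(self, send: Callable[[str], bool]) -> None:
        self._send = send  # hypothetical transport; returns True if blocked
        self._cache: Dict[str, bool] = {}
        self.queries_sent = 0

    def blocked(self, payload: str) -> bool:
        # Cache answers so that a repeated query costs no extra request.
        if payload not in self._cache:
            self._cache[payload] = self._send(payload)
            self.queries_sent += 1
        return self._cache[payload]


def toy_waf(payload: str) -> bool:
    """Stand-in for a remote WAF (assumption): blocks two naive signatures."""
    lowered = payload.lower()
    return "<script" in lowered or "union select" in lowered


oracle = FilterOracle(toy_waf)
probes: List[str] = [
    "<script>alert(1)</script>",
    "<img src=x>",
    "1 UNION SELECT 1",
    "<img src=x>",  # repeated on purpose: answered from the cache
]
observations = [(p, oracle.blocked(p)) for p in probes]
```

A learning algorithm sits on top of such an oracle and chooses which payloads to ask about next; caching matters because every uncached query is a real request to the target.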

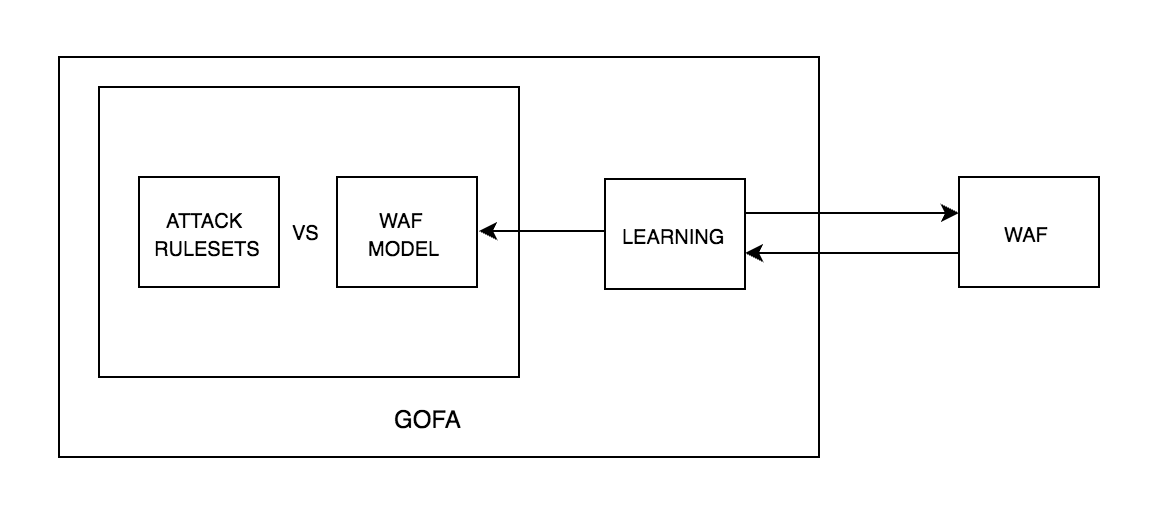

GOFA (Grammar Oriented Filter Auditing) is an offensive technique that uses the ASKK learning algorithm, proposed by Argyros et al. [1], to infer a model of a targeted WAF and identify a potential bypass. Equipped with an attack ruleset (in the form of regular expressions or grammars), GOFA can be used by a remote auditor to find a WAF-evading expression, or to obtain an approximation of the filter model that can be used for further offline testing and analysis.
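One step of this idea can be sketched as a search for a string that belongs to the attack language but is not blocked by the current filter model. The code below is a simplified illustration, not LightBulb's implementation: the `DFA` class, the token alphabet, and both toy automata are assumptions made for the example, and the learning loop that builds and refines the filter model is omitted.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class DFA:
    start: int
    accepting: Set[int]
    transitions: Dict[Tuple[int, str], int]  # missing entry = dead state

    def step(self, state: Optional[int], symbol: str) -> Optional[int]:
        if state is None:
            return None
        return self.transitions.get((state, symbol))


def find_bypass(attack: DFA, filter_model: DFA, alphabet: List[str]) -> Optional[List[str]]:
    """Breadth-first search over the product automaton for the shortest
    word accepted by `attack` and not accepted (blocked) by `filter_model`."""
    start = (attack.start, filter_model.start)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (a, f), word = queue.popleft()
        if a in attack.accepting and f not in filter_model.accepting:
            return word
        for symbol in alphabet:
            next_a = attack.step(a, symbol)
            if next_a is None:  # word has left the attack language
                continue
            nxt = (next_a, filter_model.step(f, symbol))
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, word + [symbol]))
    return None


ALPHABET = ["1", " or ", "||", "1=1"]

# Toy attack ruleset: "1" followed by (" or " | "||") followed by "1=1".
attack = DFA(0, {3}, {(0, "1"): 1, (1, " or "): 2, (1, "||"): 2, (2, "1=1"): 3})

# Toy filter model: state 1 means "blocked"; it is entered on " or " and never left.
filter_model = DFA(
    0,
    {1},
    {**{(0, s): 0 for s in ALPHABET if s != " or "}, (0, " or "): 1,
     **{(1, s): 1 for s in ALPHABET}},
)

bypass = find_bypass(attack, filter_model, ALPHABET)
candidate = "".join(bypass) if bypass else None
```

In the actual technique, a candidate found this way still has to be sent to the real WAF: if it is blocked after all, the model was wrong and the candidate serves as a counterexample for refining it.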

However, the supplied attack rulesets (e.g. a SQL injection grammar) might not contain all the expressions that are valid for the specific interpreter of the protected service (e.g. a MySQL v5.5 database). Indeed, a given interpreter instance may accept expressions that are far beyond our expectations. For example, most web browsers do not strictly follow the HTML standard, thus making the use of a generic XSS attack ruleset somewhat limiting.

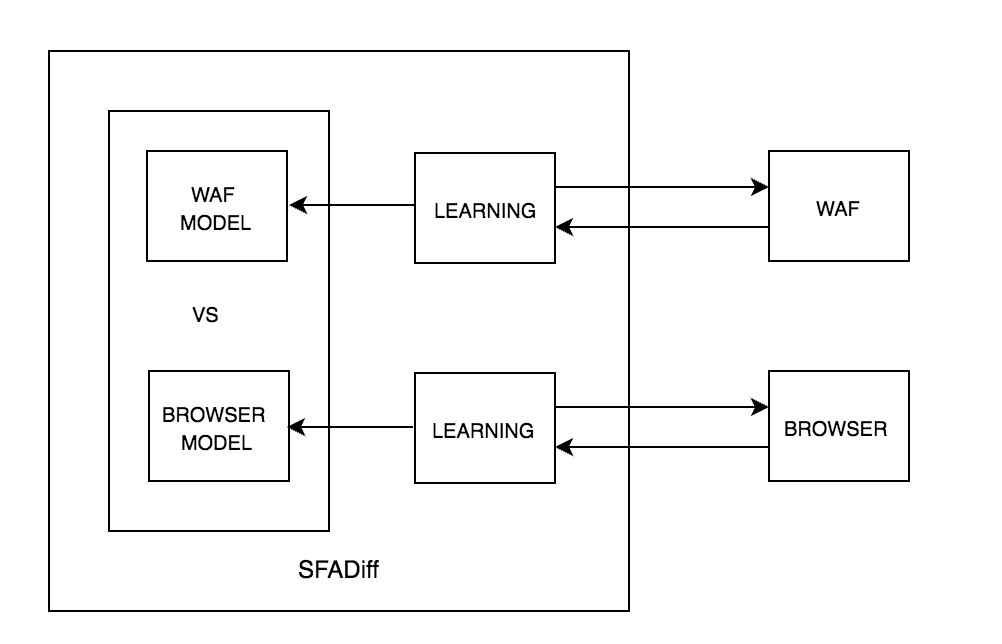

This is where the SFADiff algorithm comes into play. SFADiff also infers a model of the protected interpreter and compares it to the model of the filter. Any discrepancy between the input parsing logic of the targeted filter and that of the interpreter is essentially an expression that an attacker can use to evade detection while still having it treated as valid (e.g., as a malicious payload) by the protected interpreter. By detecting these discrepancies automatically, SFADiff removes the need for an attack ruleset as input. Only a small (yet relevant) seed input is required, containing partially known or speculated expressions of the protected interpreter’s specification (e.g., an HTML image tag), which serves as a template to bootstrap the learning procedure.
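To make the notion of a discrepancy concrete, here is a deliberately naive sketch. It is not SFADiff, which learns automata for both programs; it simply enumerates variants of a seed and queries two toy black boxes. The regular expressions standing in for the filter and the browser, and all function names, are assumptions chosen for illustration.

```python
import re
from itertools import product
from typing import Callable, Iterable, Iterator, List

SEPARATORS = [" ", "\t", "\n", "/"]
HANDLERS = ["onclick", "onmouseover"]


def seed_variants() -> Iterator[str]:
    """Expand a seed of the form <p{separator}{handler}=alert(1)>."""
    for separator, handler in product(SEPARATORS, HANDLERS):
        yield f"<p{separator}{handler}=alert(1)>"


def toy_filter_blocks(payload: str) -> bool:
    # Assumed signature: whitespace followed by an on* attribute assignment.
    return re.search(r"\son\w+=", payload) is not None


def toy_browser_accepts(payload: str) -> bool:
    # Assumed parser model: whitespace or "/" may separate tag name and attribute.
    return re.fullmatch(r"<p[\s/]+on\w+=[^>]+>", payload) is not None


def find_discrepancies(
    candidates: Iterable[str],
    filter_blocks: Callable[[str], bool],
    interpreter_accepts: Callable[[str], bool],
) -> List[str]:
    """Keep payloads the interpreter accepts but the filter lets through."""
    return [c for c in candidates
            if interpreter_accepts(c) and not filter_blocks(c)]


evasions = find_discrepancies(seed_variants(), toy_filter_blocks, toy_browser_accepts)
```

Here the two toy parsers disagree only on the `/` separator, so only those variants survive. Brute-force enumeration like this does not scale; learning a model of each side and comparing the models is what lets the search go beyond a fixed list of variants.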

Penetration testers who have satisfactory knowledge of the protected application’s interpreter, and thus sufficiently accurate attack rulesets, can use GOFA to quickly identify evading expressions. Researchers who are more interested in discovering evasion expressions that lie outside the expected standards of the application interpreter can use the (slower) SFADiff technique.

The SFADiff algorithm can also be used to automatically find differences between a set of programs with comparable functionality (e.g., WAFs, browsers). These differences can be used as fingerprints for program identification. Unlike the fingerprints used by other tools, these are not mere signatures of artifacts; they are core discrepancies between the program interpreters that cannot be easily concealed.
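Fingerprinting with such differences can be sketched as a small decision tree whose inner nodes are probe payloads. In the sketch below the probes are given by hand, whereas SFADiff discovers them through learning; the three toy filters and the names `build_tree` and `identify` are hypothetical.

```python
from typing import Callable, Dict, List, Union

Program = Callable[[str], bool]  # returns True if the payload is blocked
Tree = Union[List[str], Dict[str, object]]


def build_tree(programs: Dict[str, Program], probes: List[str]) -> Tree:
    """Recursively split the programs by the first probe they disagree on."""
    names = sorted(programs)
    if len(names) <= 1:
        return names
    for probe in probes:
        blocked = {n: programs[n] for n in names if programs[n](probe)}
        passed = {n: programs[n] for n in names if n not in blocked}
        if blocked and passed:
            return {
                "probe": probe,
                "blocked": build_tree(blocked, probes),
                "passed": build_tree(passed, probes),
            }
    return names  # these programs cannot be told apart with the given probes


def identify(tree: Tree, target: Program) -> List[str]:
    """Walk the tree by querying the unknown target with each probe."""
    while isinstance(tree, dict):
        tree = tree["blocked"] if target(tree["probe"]) else tree["passed"]
    return tree


# Toy filters (assumptions, not models of any real product).
toy_programs: Dict[str, Program] = {
    "waf_a": lambda p: "<script" in p,
    "waf_b": lambda p: "<script" in p or "onerror" in p,
    "waf_c": lambda p: "onerror" in p or "union" in p,
}
probes = ["<script>", "<img onerror=x>", "union select"]

tree = build_tree(toy_programs, probes)
guess = identify(tree, toy_programs["waf_b"])
```

Identifying an unknown target then takes at most as many queries as the tree is deep, which is two in this toy setup.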

You can download the LightBulb Framework at: https://github.com/lightbulb-framework/lightbulb-framework

Example #1: Using GOFA to assess ModSecurity CRS 2.99 against SQL injections

In this example, we evaluate the effectiveness of ModSecurity against SQL injections using a grammar containing valid SQL query suffixes as input. We forward a request to LightBulb’s Burp extension, select the “query_sql” sample grammar from the “Learning/Tests” tab, and press “Start Learning”. As soon as a filter bypass is found, we remove it from the current grammar and repeat the operation to find a new one.

Example #2: Using SFADiff to assess PHPIDS 0.7 against XSS attacks

In the following example, we use SFADiff to discover an XSS bypass in PHPIDS version 0.7. Here, we allow SFADiff to infer the model of our browser and provide only an input HTML regex as a seed. Again, we forward a request to LightBulb’s Burp extension, select the “Browser/html_p_attribute” sample regular expression from the “Learning/Seeds” tab, and press “Start Filter Differential Learning with Browser”. A popup instructs us to point our browser at a specific listening port, through which the learning procedure is performed.

Example #3: Using SFADiff to create a distinguish tree for ModSecurity, PHPIDS 0.7 and PHPIDS 0.6

Finally, we use SFADiff to automatically generate a distinguish tree for ModSecurity and two known versions of PHPIDS. The tree contains input payloads that are either accepted or rejected by each targeted WAF. At the end, we use the generated tree to fingerprint these WAFs and verify its correctness.

References

[1] G. Argyros, I. Stais, A. Kiayias and A. D. Keromytis, "Back in Black: Towards Formal, Black Box Analysis of Sanitizers and Filters," 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, 2016, pp. 91-109. doi: 10.1109/SP.2016.14

[2] G. Argyros, I. Stais, S. Jana, A. D. Keromytis, and A. Kiayias. 2016. SFADiff: Automated Evasion Attacks and Fingerprinting Using Black-box Differential Automata Learning. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS '16). ACM, New York, NY, USA, 1690-1701. doi: 10.1145/2976749.2978383

[3] G. Argyros, I. Stais. Another Brick of the Wall: Deconstructing WAFs using Automata Learning, Black Hat Europe 2016 (Slides)

[4] I. Stais. Shedding Light on the Dark Side of WAFs and Filters, BSides Athens 2017 (Slides)

[5] Discovered Vectors, https://lightbulb-framework.github.io/vectors/